Performance of a machine learning model

How do you evaluate?

Evaluating a machine learning model is a crucial step in model development. The right evaluation method depends on the type of problem you are dealing with (classification, regression, clustering, etc.). Here are some common evaluation techniques and metrics for different types of machine learning problems:

1. Classification

For classification tasks, the goal is to predict a categorical label. Common evaluation metrics include:

Accuracy

- Definition: The proportion of correctly predicted instances out of the total instances.

- Formula: Accuracy = Number of correct predictions / Total number of predictions
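The accuracy formula can be sketched in a few lines of plain Python. This is a minimal illustration, not a library API: the helper name `accuracy` and the toy labels are assumptions made up for the example.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on empty input")
    # Count positions where the prediction equals the true label.
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical toy data: 8 instances, 6 predicted correctly.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

acc = accuracy(y_true, y_pred)
print(acc)  # 0.75
```

Here 6 of the 8 predictions match, so the accuracy is 6 / 8 = 0.75.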

Confusion Matrix

- Definition: A matrix that shows the counts of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions.

- Components:

  - TP: Positive instances correctly predicted as positive

  - TN: Negative instances correctly predicted as negative

  - FP: Negative instances incorrectly predicted as positive

  - FN: Positive instances incorrectly predicted as negative
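For a binary problem, the four counts can be tallied with a single pass over the labels. The sketch below assumes labels are encoded as 1 (positive) and 0 (negative); the function name `confusion_counts` and the sample data are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, and FN for a binary classification problem."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1  # predicted positive, actually positive
        elif p == positive:
            fp += 1  # predicted positive, actually negative
        elif t == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1  # predicted negative, actually negative
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


# Hypothetical toy data (assumed encoding: 1 = positive, 0 = negative).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
print(counts)  # {'TP': 3, 'TN': 3, 'FP': 1, 'FN': 1}
```

The four counts always sum to the number of instances, which makes a quick sanity check.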

Precision, Recall, and F1-Score

- Precision: The proportion of positive predictions that are actually correct.

  - Precision = TP / (TP + FP)

- Recall: The proportion of actual positives that are correctly predicted.

  - Recall = TP / (TP + FN)

- F1-Score: The harmonic mean of precision and recall.

  - F1-Score = 2 × (Precision × Recall) / (Precision + Recall)
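A short worked example shows how the three formulas fit together. The helper `precision_recall_f1` is a hypothetical name, the counts are invented for illustration, and returning 0.0 when a denominator is zero is an assumed convention rather than something the formulas prescribe.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from confusion-matrix counts.

    Assumption: a metric whose denominator is zero is reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 8 true positives, 2 false positives, 8 false negatives.
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
# precision=0.800 recall=0.500 f1=0.615
```

Because F1 is a harmonic mean, it sits closer to the lower of the two values (0.615 here, versus an arithmetic mean of 0.65), so a model cannot score well on F1 by excelling at only one of precision or recall.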

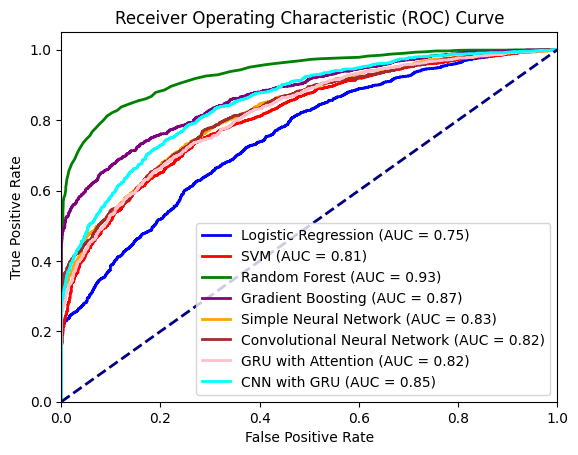

ROC-AUC Score

- ROC (Receiver Operating Characteristic) Curve: A plot of the true positive rate (recall) against the false positive rate, FP / (FP + TN), at every classification threshold. It shows how the trade-off between the two rates changes as the threshold moves.

- AUC (Area Under Curve): The area under the ROC curve, representing the model’s ability to distinguish between classes. An AUC of 1.0 means the classes are perfectly separated, while 0.5 is no better than random guessing.
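To make the threshold sweep concrete, the sketch below builds the ROC points by hand and integrates them with the trapezoidal rule. It is a simplified illustration under stated assumptions: labels are 1 (positive) and 0 (negative), an instance is predicted positive when its score is at or above the threshold, and the function names `roc_points` and `auc` are hypothetical.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points, sweeping the threshold from high to low."""
    pos = sum(1 for t in y_true if t == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative label")
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Trapezoidal area under a curve given as (x, y) points sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical scores from a classifier (higher means "more likely positive").
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

points = roc_points(y_true, scores)
print(points)       # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc(points))  # 0.75
```

The result of 0.75 matches the ranking view of AUC: of the four positive/negative pairs in this toy data, three have the positive instance scored higher than the negative one.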