SMOTE and GAN: Similarities, Differences, and Applications

SMOTE vs GAN

What is SMOTE?

Synthetic Minority Oversampling Technique (SMOTE) is a method for addressing class imbalance in machine learning (ML) and deep learning (DL) models. Class imbalance occurs when one class is significantly underrepresented compared to the others, which leads models to favor the majority class during training. SMOTE generates synthetic data points for the minority class by interpolating between a minority sample and one of its k nearest minority-class neighbors, so each new point lies on the line segment joining two existing minority samples. For example, if 20% of a dataset represents diabetic patients and 80% represents non-diabetic patients, SMOTE creates synthetic diabetic samples until the class distribution is balanced (Chawla et al., 2002). It is widely used in ML and DL workflows for tasks such as fraud detection and healthcare prediction.
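The interpolation step can be sketched from scratch in NumPy. This is a minimal illustration, assuming purely numeric features and a small minority set (distances are computed brute-force); the function name `smote` and its parameters are hypothetical and are not the imbalanced-learn API, which provides its own `imblearn.over_sampling.SMOTE` class.

```python
import numpy as np

def smote(X_min, n_new, k=5, rng=None):
    """Hypothetical minimal SMOTE sketch: create n_new synthetic minority rows.

    Each new point is x_i + lam * (x_nn - x_i), where x_nn is one of the k
    nearest minority-class neighbors of x_i and lam is drawn from [0, 1).
    """
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # cannot use more neighbors than there are other minority points

    # Brute-force pairwise squared Euclidean distances between minority samples.
    d = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d, np.inf)               # a point is not its own neighbor
    neighbors = np.argsort(d, axis=1)[:, :k]  # k nearest neighbors of each row

    base = rng.integers(0, n, size=n_new)                   # random anchor samples
    pick = neighbors[base, rng.integers(0, k, size=n_new)]  # one random neighbor each
    lam = rng.random((n_new, 1))                            # interpolation weights
    return X_min[base] + lam * (X_min[pick] - X_min[base])

# Usage: 20 minority vs. 80 majority samples needs 60 synthetic minority rows.
X_min = np.random.default_rng(0).normal(size=(20, 2))
X_new = smote(X_min, n_new=60, rng=1)
print(X_new.shape)  # (60, 2)
```

Because every synthetic row is a convex combination of two real minority rows, SMOTE never produces points outside the region spanned by the existing minority data, which is what "linear interpolation" means in practice.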

What is GAN?

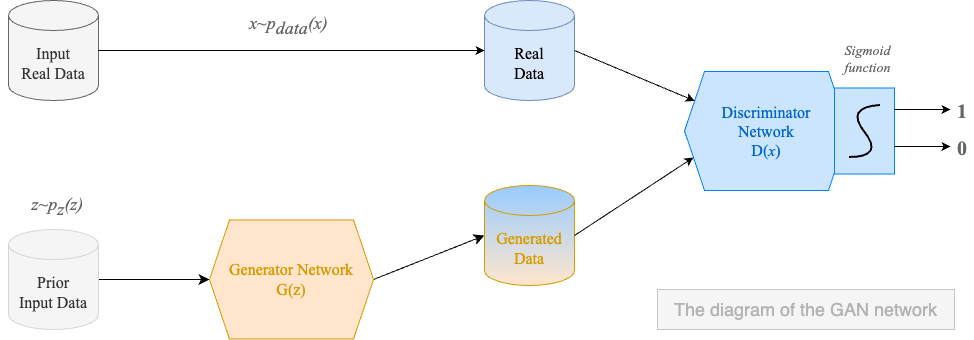

Generative Adversarial Networks (GANs) are neural-network models that generate synthetic data by learning the distribution of the original dataset. A GAN consists of two networks: a generator, which maps random noise to synthetic samples, and a discriminator, which tries to distinguish real samples from generated ones. The two are trained adversarially, with the generator improving until the discriminator can no longer reliably tell its outputs from real data (Goodfellow et al., 2014). Unlike SMOTE, which interpolates linearly between existing points, GANs can capture non-linear structure in high-dimensional data, and they have broader applications, including data augmentation and image synthesis.
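The adversarial setup corresponds to the minimax objective of Goodfellow et al. (2014), min_G max_D V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))]. The sketch below only estimates that value from a batch of discriminator outputs; it does not train networks. The function names are hypothetical, and the second function is the non-saturating generator loss, -E[log D(G(z))], that the same paper suggests using in practice.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator probabilities on real samples, D(x)
    d_fake: discriminator probabilities on generated samples, D(G(z))
    The discriminator tries to increase this value; the generator tries to decrease it.
    """
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1 - eps)  # avoid log(0)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1 - eps)
    return np.mean(np.log(d_real)) + np.mean(np.log(1 - d_fake))

def generator_loss_nonsaturating(d_fake, eps=1e-12):
    """Non-saturating generator loss -E[log D(G(z))]; lower when fakes fool D."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1 - eps)
    return -np.mean(np.log(d_fake))

# A discriminator that outputs 0.5 everywhere cannot tell real from fake;
# V then equals -log 4, the value at the GAN's theoretical optimum.
print(gan_value([0.5, 0.5], [0.5, 0.5]))  # about -1.3863
```

A discriminator that separates the two sets well (for example D(x) = 0.9 and D(G(z)) = 0.1) yields a higher value, which is the signal the generator is trained against.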

Similarities Between SMOTE and GAN

- Synthetic Data Generation: Both methods create synthetic samples that can be added to the training set to address class imbalance.

- Improved Model Performance: By balancing the training data, both techniques reduce a model's bias toward the majority class, typically improving recall on the minority class.

- Use in ML/DL: Both are frequently applied to enhance ML and DL models, especially when minority-class data is limited.
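To see why the majority-class bias matters, consider the 20% diabetic / 80% non-diabetic split from the SMOTE example. The hypothetical labels and helper functions below show that a model which always predicts "non-diabetic" scores 80% accuracy while detecting none of the minority cases, which is the failure mode that oversampling aims to reduce.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive=1):
    """Fraction of true positive-class cases that the model detects."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp / (tp + fn) if tp + fn else 0.0

y_true = [1] * 20 + [0] * 80   # hypothetical labels: 20% diabetic (1), 80% non-diabetic (0)
y_pred = [0] * 100             # a model that always predicts the majority class
print(accuracy(y_true, y_pred))  # 0.8
print(recall(y_true, y_pred))    # 0.0
```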

Differences Between SMOTE and GAN

| Aspect | SMOTE | GAN |
|---|---|---|
| Approach | Creates synthetic samples by linear interpolation between a minority sample and a nearby minority neighbor. | Learns the data distribution through adversarial training and can generate realistic, non-linear samples. |
| Complexity | Simple and computationally efficient. | Computationally intensive; requires more resources and training effort. |
| Usage | Designed specifically for oversampling minority classes. | Broader applications, including data augmentation and image synthesis. |
| Output quality | Samples lie on line segments between existing minority points, so diversity is limited. | Can produce more diverse and realistic synthetic samples. |

Example and Experimental Analysis

In an experiment on a heart failure prediction dataset of 4,240 records, both SMOTE and GAN were used to address class imbalance. SMOTE oversampled the minority class for standard ML/DL models, while a GAN, itself built from neural networks, generated the synthetic samples in the second setup. GAN clearly outperformed SMOTE: the model reached an ROC AUC of 0.83 with SMOTE and 0.98 with GAN. This result suggests that the GAN learned complex, non-linear patterns in the data and produced more realistic and diverse synthetic samples than SMOTE's interpolation-based method.
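ROC AUC can be read as the probability that a randomly chosen positive record receives a higher predicted score than a randomly chosen negative one (ties count as one half). The pure-Python sketch below computes it directly from that definition; the function name is hypothetical, and the O(n_pos × n_neg) loop is meant for illustration, not for large datasets. When comparing oversampling methods, the scores would typically come from a held-out test set of real records, with SMOTE or GAN applied only to the training split.

```python
def roc_auc(y_true, scores):
    """ROC AUC as P(score of a random positive > score of a random negative).

    y_true: labels, 1 for the positive (minority) class and 0 otherwise
    scores: predicted scores or probabilities for the positive class
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            # A correctly ordered pair counts 1, a tie counts 0.5, a misordered pair 0.
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))

print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, so the reported move from 0.83 to 0.98 means far fewer misordered positive/negative pairs.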

Conclusion

SMOTE and GAN are both effective for handling class imbalance in ML and DL models, but which works better depends on the dataset and the complexity of the problem. SMOTE is simpler and suits data where linear interpolation between neighboring minority samples yields plausible points, while GAN excels on non-linear, high-dimensional datasets because it can learn to generate realistic synthetic data. In the heart failure prediction experiment, GAN achieved an ROC AUC of 0.98 compared to 0.83 with SMOTE. This suggests that GAN is a more powerful tool for improving model performance on complex datasets, particularly when reliable detection of the minority class is required.

References

Chawla, N. V., Bowyer, K. W., Hall, L. O., & Kegelmeyer, W. P. (2002). SMOTE: Synthetic Minority Over-sampling Technique. Journal of Artificial Intelligence Research, 16, 321–357.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., … & Bengio, Y. (2014). Generative adversarial nets. Advances in Neural Information Processing Systems, 27, 2672–2680.

Zhu, X., Wang, H., & Liu, Y. (2020). Balancing imbalanced datasets with SMOTE and GAN. IEEE Transactions on Neural Networks and Learning Systems, 31(6), 2043–2055. https://doi.org/10.1109/TNNLS.2019.2961736